Unsurprisingly, one of the questions clients and prospects have asked most often over the past eighteen months goes something like: “Can candidates use ChatGPT, or a similar Large Language Model AI, to cheat on their psychometric tests?”

Social media commentary seemed to lean towards a resounding ‘YES’, with some spectacular claims of outstanding scores and the end of psychometrics as we know it. With the Christmas/summer quiet period approaching, we decided to put these claims to the test. The break brought an extremely IT-savvy and smart daughter home for the festivities, so the opportunity was perfect.

Her mission was simple (and, of course, involved payment): “Do your absolute worst; cheat as hard as you can on a range of Ability Tests and see if you can’t swing a personality profile in your favour whilst you’re at it.”

As ChatGPT and similar AI tools are constantly learning, we didn’t run any complete tests. We used samples instead, to avoid the risk of the AI learning the items and suddenly doing better at the tests than it did before!

Using tests from two respected psychometrics publishers, here’s what we found:

Overall Observations as at January 2024

- ChatGPT4 provides extremely mixed results as a cheating aid in completing psychometric ability tests.

- A first major disadvantage of relying on ChatGPT4 to do an ability test for you is that it limits the number of questions you can ask to between 20 and 40 in one sitting, then permits no more questions for one to three hours. This reduces its usefulness for any test battery of more than 20 items, which is most test batteries.

- A second major disadvantage is the time it takes ChatGPT4 to analyse any item before responding, which runs the risk of cheating candidates running out of time. This was particularly noticeable in Critical Reasoning tests, where ChatGPT4 was taking from 45 seconds to two and a half minutes to respond to each question.

- Whilst ChatGPT4 cannot read the pages of a test, anyone adept with the snipping tool can quickly drop test items, including the graphics and the task required, into ChatGPT4 to be analysed. However, ChatGPT4’s reading of graphics was generally slow and frequently inaccurate.

- Questions can also be read out loud to ChatGPT4, although this requires all possible answers to be read out loud as well, which again takes up time.

Ability Tests ChatGPT4 did well in

- General Numerical & Verbal Reasoning Tests. ChatGPT4 provided accurate answers, but typically took 23 – 46 seconds to answer each question, which would prevent a cheating test-taker from progressing far enough into most General Reasoning Tests to amass a significant score.

- Checking Tests, where participants are required to find exact matches to a range of options. ChatGPT4 was extremely accurate but took far too long for candidates to amass a significant score in tests that typically have 25 to 50 items and very short time limits of 4 or 5 minutes.
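
The time pressure described above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name is made up for this example, it borrows the 23 to 46 second response times observed for General Reasoning Tests as stand-in figures (no per-item time is reported here for Checking Tests), and it ignores the extra seconds spent snipping and pasting each item.

```python
def max_items_answered(time_limit_s: int, seconds_per_item: int, total_items: int) -> int:
    """Upper bound on items answered if every item costs a fixed AI response time."""
    return min(total_items, time_limit_s // seconds_per_item)


# Time limits and item counts quoted above for Checking Tests.
scenarios = [
    ("4 minutes, 25 items", 4 * 60, 25),
    ("5 minutes, 50 items", 5 * 60, 50),
]

for label, limit_s, items in scenarios:
    # Assumed per-item response times in seconds (taken from the General Reasoning figures).
    for response_s in (23, 46):
        answered = max_items_answered(limit_s, response_s, items)
        print(f"{label}, {response_s}s per item: at most {answered} of {items} answered")
```

Under these assumptions, even the faster response time gets a candidate through only 13 items of a 50-item test in five minutes, roughly a quarter of the test.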

Ability Tests ChatGPT4 did poorly in

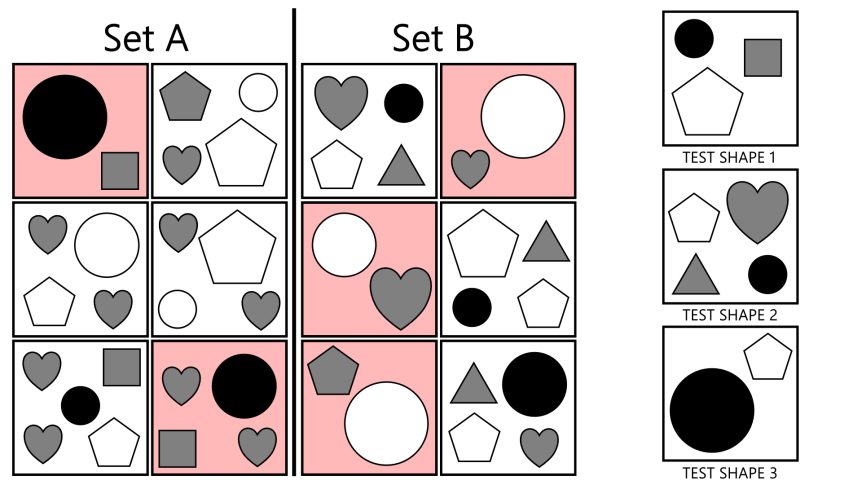

- Abstract Reasoning Tests. Everyone’s favourite ability tests where participants are presented with patterns and sequences. The images can be readily snipped & pasted into ChatGPT4, but whilst it could describe possible patterns, it failed entirely at reaching conclusions and didn’t seem to recognise that the boxes with possible answers were where to look for a conclusion.

- Mechanical Reasoning Tests. The same observations on why ChatGPT4 struggled with Abstract Reasoning Tests seemed to apply to Mechanical Reasoning Tests, which are similarly diagrammatic in the way they ask questions. ChatGPT4 presented arguably correct interpretations of the task, but not answers that were one of the options available. It also took a long time to get there; typically upwards of a minute per question.

- Critical Numerical Reasoning. Questions involving graphs and tables of data slowed ChatGPT4 down dramatically. The shortest response time was 46 seconds and the longest 2 minutes & 34 seconds; both resulting in incorrect/unusable answers. Again, ChatGPT4 didn’t recognise the answer option boxes as the place it should select an answer from.

- Critical Verbal Reasoning. Tasks requiring participants to answer questions about passages of text; typically, whether statements are True, False or Cannot be determined. ChatGPT4 was reasonably accurate and faster than in Critical Numerical tests, but still took 45 – 60 seconds to recommend an answer, with the explanation of its suggestion often presented in a lengthy paragraph, further extending the time required for a cheater to respond.

- On the Critical Reasoning, Abstract and Mechanical Reasoning Tests, ChatGPT4 did not seem to recognise the answer option boxes as the place to find the answer; instead it attempted to give answers from its own workings, which often matched none of the available options.

Cheating a Big-5 Personality Questionnaire

- Being limited to 20 – 40 questions in any 3 hour session would restrict how someone could hope to use ChatGPT4 to answer a personality questionnaire with 100 to 200 items.

- However, we tried anyway, asking ChatGPT4 to make us look like an empathetic manager, then a great salesperson.

- ChatGPT4 gave credible suggestions when asked how to respond to questionnaire items in a way that would make the respondent look like an empathetic manager or great salesperson, although it would be fair to say that most people would recognise such items themselves.

- We didn’t respond to a whole questionnaire so couldn’t see whether Impression Management measures are affected by using AI to answer a personality questionnaire.
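
To put the question cap in perspective, here is a rough sketch of the waiting time it implies for a full questionnaire. It assumes one question to ChatGPT4 per questionnaire item and a lockout after every full sitting; the function names are invented for this illustration, and the caps and lockout lengths are the ranges quoted earlier in this article.

```python
import math


def sittings_needed(total_items: int, questions_per_sitting: int) -> int:
    """Number of sittings required if each item uses up one question."""
    return math.ceil(total_items / questions_per_sitting)


def hours_locked_out(total_items: int, questions_per_sitting: int, lockout_hours: float) -> float:
    """Total lockout time, assuming a lockout follows every sitting except the last."""
    return (sittings_needed(total_items, questions_per_sitting) - 1) * lockout_hours


# Best case: short questionnaire, generous cap, short lockout.
print(sittings_needed(100, 40), hours_locked_out(100, 40, 1))
# Worst case: long questionnaire, tight cap, long lockout.
print(sittings_needed(200, 20), hours_locked_out(200, 20, 3))
```

On these assumptions a 100-item questionnaire needs at least three sittings, and a 200-item one could need ten sittings with more than a day of lockouts in between.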

Conclusions as at January 2024

All these Large Language Model AI tools are evolving, so these conclusions need a time stamp showing when they were made, as they may no longer be true even later this year.

However, from the perspective of a candidate determined to cheat their way through a psychometric ability test suite, AI is likely to cause as many headaches as benefits, mostly because of the time it takes AI to come up with a possible answer compared to the test-taker just working it out themselves.

Getting a clever friend to do your test for you would seem a much better option.

Practical Suggestions for Employers, People & Culture/HR & Hiring Managers

- AI hasn’t killed Psychometrics. But it has changed the landscape in terms of how candidates might approach taking tests, which you need to be aware of and respond to.

- New risks to Candidates and Employers. What if perfectly capable candidates use AI and get crappy scores way below what they could have achieved themselves? Do you then reject capable people, who lose out on a job they could have done?

- Sharpen Pre-Employment Communications. Use your recruitment communications to let candidates know that AI is an unreliable source of help for completing tests and assessments.

- Stick to Timed Tests. There was a trend towards untimed ability tests in recent years, with several publishers releasing untimed versions of their General and Critical Reasoning Tests. Time limits are clearly the biggest disadvantage to using AI to cheat a test, so stick to the timed versions.

- Look for Slow but Accurate General Reasoning Test Scores. Where candidates have not progressed far into a timed test, but have high accuracy rates, probe a little further at interview. Some people achieve these scores entirely honestly because that’s how they solve problems, but some might have been using AI to assist.

- Clever Friends. Getting assistance from clever friends when completing online tests remains a far greater risk to the integrity of your selection process. Look for ways to minimise that risk through interventions such as proctoring, running tests onsite where you can, or advising candidates that they will be re-tested as part of their induction.
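
The “slow but accurate” suggestion above could be applied to exported test results along the following lines. This is a minimal sketch, not a validated screening rule: the record fields, the function name and both thresholds (half the test attempted, 90% accuracy) are hypothetical placeholders that you would need to replace with values suited to your own tests. A flag is only a prompt to probe further at interview, since some candidates produce this pattern entirely honestly.

```python
from dataclasses import dataclass


@dataclass
class AbilityTestResult:
    candidate: str
    items_total: int
    items_attempted: int
    items_correct: int


def is_slow_but_accurate(result: AbilityTestResult,
                         max_completion: float = 0.5,   # hypothetical threshold
                         min_accuracy: float = 0.9) -> bool:  # hypothetical threshold
    """True when few items were attempted but most attempted items were correct."""
    if result.items_attempted == 0:
        return False
    completion = result.items_attempted / result.items_total
    accuracy = result.items_correct / result.items_attempted
    return completion <= max_completion and accuracy >= min_accuracy


# Made-up example records, purely for illustration.
results = [
    AbilityTestResult("Candidate A", items_total=40, items_attempted=12, items_correct=12),
    AbilityTestResult("Candidate B", items_total=40, items_attempted=36, items_correct=30),
    AbilityTestResult("Candidate C", items_total=40, items_attempted=14, items_correct=8),
]

to_probe = [r.candidate for r in results if is_slow_but_accurate(r)]
print(to_probe)
```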